While attackers increasingly use Generative AI (GenAI) to craft context-aware phishing emails, cybersecurity defenders are exploring how to put GenAI to work for defense. Doing so, however, raises several challenges:

- Computational resource constraints of large language models (LLMs)

- Low tolerance for hallucinations in security workflows

- Safety and privacy concerns

Introducing RidgeGen: A Lightweight, Secure GenAI Solution

RidgeGen is a lightweight, efficient on-board service module that integrates GenAI into RidgeBot, our flagship security platform. Its first application is Personally Identifiable Information (PII) detection, where a specialized, domain-trained model achieves 99.6% accuracy. Because RidgeGen runs entirely locally, no private data ever leaves the RidgeBot platform, which addresses critical security and privacy concerns.

Addressing Key Challenges in GenAI-Powered Cybersecurity

1. Managing Computational Resource Constraints

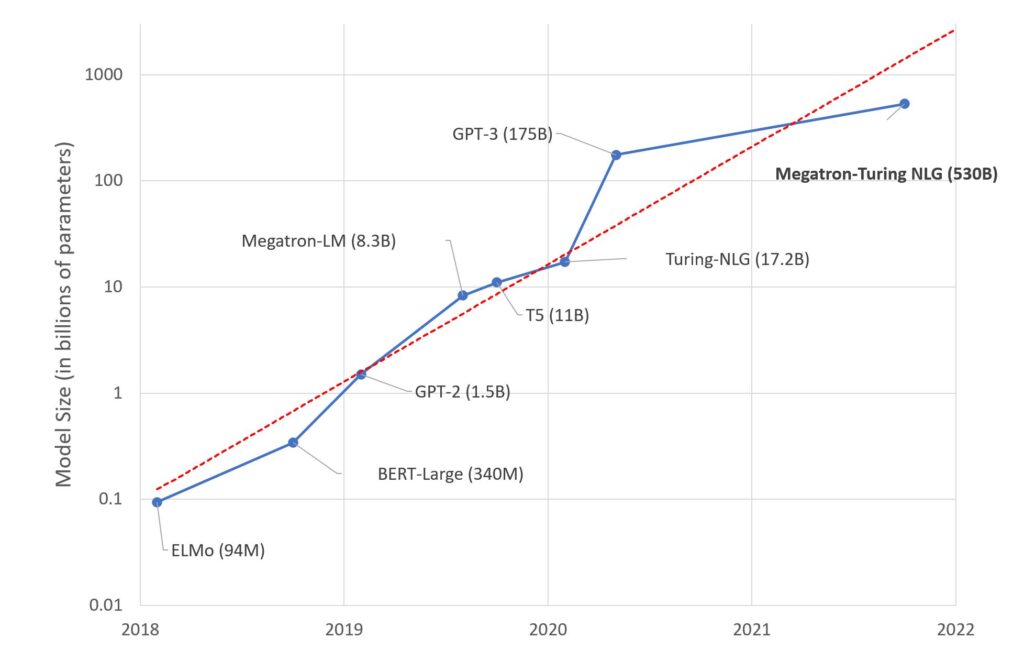

AI models require substantial memory and processing power. As Figure 1 illustrates, the number of weights in AI models is growing rapidly. For example, BERT-Large, which is comparable in size to our internal model, requires approximately 1.2 GB of storage when compressed. Larger models, such as GPT variants, contain billions of parameters and demand far greater computational resources.
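A back-of-the-envelope calculation shows why these sizes matter: the memory needed just to hold a model's weights is roughly its parameter count multiplied by the bytes stored per parameter. The sketch below assumes BERT-Large's commonly cited size of about 340 million parameters and a hypothetical 7-billion-parameter GPT-style model; `weight_memory_gb` is an illustrative helper, and the estimate ignores activations, caches, and runtime overhead, so real footprints are larger.

```python
def weight_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Approximate memory for model weights alone, in decimal GB (1e9 bytes).

    Illustrative helper: ignores activations, caches, and runtime overhead.
    """
    return num_params * bytes_per_param / 1e9


# BERT-Large: roughly 340 million parameters stored as 32-bit floats (4 bytes).
bert_large_fp32 = weight_memory_gb(340_000_000, 4)
# Hypothetical 7-billion-parameter GPT-style model in 16-bit floats (2 bytes).
llm_7b_fp16 = weight_memory_gb(7_000_000_000, 2)

print(f"BERT-Large (fp32): {bert_large_fp32:.2f} GB")
print(f"7B model (fp16):   {llm_7b_fp16:.1f} GB")
```

Uncompressed 32-bit weights for BERT-Large come to about 1.36 GB, in line with the roughly 1.2 GB compressed figure, while the 7B model needs about ten times that even at half precision.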

For fast inference, a model's weights must be loaded into the host machine's memory. Poorly managed models, however, can overload the system and degrade performance. Unlike conventional applications, AI models cannot be loaded and unloaded arbitrarily without disrupting operations, because loading a large model takes significant time and memory. Instead, a structured monitoring system must dynamically manage which models are available and how requests are distributed among them, balancing efficiency against resource allocation.
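A minimal sketch of such a monitor, assuming a fixed memory budget and least-recently-used (LRU) eviction: a model stays resident while requests are using it, and only idle models are unloaded to make room for new ones. The class and model names (`ModelManager`, `pii-ner`, and so on) are hypothetical, and actual weight loading is stubbed out; this illustrates the idea rather than RidgeGen's implementation.

```python
from collections import OrderedDict
from dataclasses import dataclass


@dataclass
class LoadedModel:
    name: str
    size_mb: int
    active_requests: int = 0  # requests currently using this model


class ModelManager:
    """Hypothetical sketch: keep models resident within a memory budget and
    evict the least recently used *idle* model when space is needed."""

    def __init__(self, budget_mb: int, sizes_mb: dict[str, int]):
        self.budget_mb = budget_mb
        self.sizes_mb = sizes_mb  # assumed known memory footprint per model
        self.loaded: OrderedDict[str, LoadedModel] = OrderedDict()  # LRU order

    def used_mb(self) -> int:
        return sum(m.size_mb for m in self.loaded.values())

    def acquire(self, name: str) -> LoadedModel:
        """Route a request to `name`, loading it (and evicting idle models) if needed."""
        if name in self.loaded:
            self.loaded.move_to_end(name)  # mark as most recently used
        else:
            needed = self.sizes_mb[name]
            # Walk models from least to most recently used, evicting idle ones.
            for victim in list(self.loaded):
                if self.used_mb() + needed <= self.budget_mb:
                    break
                if self.loaded[victim].active_requests == 0:
                    del self.loaded[victim]  # real code would free the weights here
            if self.used_mb() + needed > self.budget_mb:
                raise MemoryError(f"cannot fit {name}: busy models fill the budget")
            self.loaded[name] = LoadedModel(name, needed)  # real code would load weights here
        model = self.loaded[name]
        model.active_requests += 1
        return model

    def release(self, name: str) -> None:
        """Mark one request on `name` as finished so the model becomes evictable."""
        self.loaded[name].active_requests -= 1


mgr = ModelManager(budget_mb=2000,
                   sizes_mb={"pii-ner": 1200, "classifier": 500, "summarizer": 900})
mgr.acquire("pii-ner")
mgr.release("pii-ner")       # pii-ner is now idle
mgr.acquire("classifier")    # still in use
mgr.acquire("summarizer")    # evicts idle pii-ner to make room
print(list(mgr.loaded))      # ['classifier', 'summarizer']
```

Reference counting is what keeps eviction safe here: a model serving an in-flight request is never unloaded, and a request that cannot fit without doing so fails fast instead of stalling the system.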

2. Tackling Hallucinations and Ensuring Accuracy

One of the primary challenges in deploying AI for cybersecurity is the risk of hallucinations: incorrect or fabricated outputs that could mislead security professionals. In security testing, inaccurate information can result in:

- False positives, leading to unnecessary investigations

- Misconfigurations, potentially weakening security defenses

- Overlooked vulnerabilities, exposing systems to real threats

RidgeGen mitigates this risk by incorporating a specially trained AI model, built for precision in security applications. By leveraging domain-specific data and rigorous validation processes, RidgeGen significantly reduces hallucinations while maintaining 99.6% accuracy in PII detection.
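As an illustration of what such validation can look like (an assumption, not a description of RidgeGen's actual pipeline), one common safeguard when a model extracts entities is a grounding check: discard any predicted entity whose text does not appear at the claimed position in the input, since such output is fabricated by definition. The `Entity` type and `grounded_entities` function below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    label: str   # e.g. "PERSON", "EMAIL"
    text: str    # the surface text the model claims it found
    start: int   # character offset where the entity starts in the input
    end: int     # character offset one past the entity's last character


def grounded_entities(source: str, predicted: list[Entity]) -> list[Entity]:
    """Keep only predictions whose offsets point at exactly the claimed text.

    Anything else (out-of-range offsets or mismatched text) was not present
    in the input and is treated as a hallucination.
    """
    kept = []
    for ent in predicted:
        in_bounds = 0 <= ent.start < ent.end <= len(source)
        if in_bounds and source[ent.start:ent.end] == ent.text:
            kept.append(ent)
    return kept


source = "Contact Alice Smith at alice@example.com."
predicted = [
    Entity("PERSON", "Alice Smith", 8, 19),
    Entity("EMAIL", "alice@example.com", 23, 40),
    Entity("PHONE", "555-0100", 41, 49),  # not in the input: fabricated
]
for ent in grounded_entities(source, predicted):
    print(ent.label, ent.text)
```

A check like this cannot catch a real span given the wrong label, so it complements, rather than replaces, a well-trained model.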

The named entity recognition (NER) benchmark results below show RidgeGen outperforming ChatGPT and other models:

- Out-of-domain NER tasks: RidgeGen achieves an average score of 55.7, ahead of leading LLMs such as ChatGPT by 15 points and higher than GoLLIE.

- In-domain NER tasks: RidgeGen achieves an average score of 47.8 across multiple datasets, outperforming models such as ChatGPT and UniNER-7B.

3. Ensuring Security and Privacy with a Local-First Design

Security and privacy concerns are paramount when integrating AI into cybersecurity platforms. Many large-scale AI models rely on cloud-based processing, which introduces risks related to data leakage, compliance violations, and third-party dependencies.

RidgeGen is built as a fully on-device solution, so no sensitive information leaves the RidgeBot environment. This local-first architecture aligns with strict security policies and gives users full control over AI operations.
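One way to enforce a local-first policy in code is to refuse to send data to any inference endpoint that is not on the loopback interface. The sketch below uses only Python's standard `urllib.parse` and `ipaddress` modules; the function names and endpoint URLs are hypothetical and do not describe RidgeBot's internals.

```python
import ipaddress
from urllib.parse import urlparse


def is_local_endpoint(url: str) -> bool:
    """Return True only if `url` points at this machine's loopback interface."""
    host = urlparse(url).hostname  # lowercased, IPv6 brackets stripped
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # A DNS name other than "localhost" could resolve anywhere, so reject it.
        return False


def require_local(url: str) -> None:
    """Raise before any sensitive payload is sent to a non-local endpoint."""
    if not is_local_endpoint(url):
        raise PermissionError(f"refusing to send data off-device: {url}")


for url in ["http://127.0.0.1:8080/infer", "http://[::1]:8080/infer",
            "https://api.example.com/v1/infer"]:
    print(url, is_local_endpoint(url))
```

Rejecting unknown hostnames by default, rather than resolving them, keeps the guard conservative: a misconfigured endpoint fails closed instead of leaking data.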

RidgeGen: An Optimized Solution for Secure and Efficient AI

RidgeGen is a dedicated service module that optimizes AI model management, request governance, and resource utilization within RidgeBot. Its key functionalities include:

- Dynamic model loading and unloading to balance performance and efficiency

- Centralized governance to enforce AI model usage policies

- Localized, high-accuracy models tailored for cybersecurity, eliminating reliance on external servers
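Centralized governance can be pictured as a single gateway that every plugin's AI request passes through. The sketch below assumes a per-caller policy listing which models a caller may use and how many requests it may have in flight at once; `Policy`, `Gateway`, and the caller and model names are hypothetical, not RidgeGen's API.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    allowed_models: frozenset[str]  # models this caller may invoke
    max_concurrent: int             # cap on this caller's in-flight requests


@dataclass
class Gateway:
    policies: dict[str, Policy]
    in_flight: dict[str, int] = field(default_factory=dict)

    def admit(self, caller: str, model: str) -> bool:
        """Admit a request only if policy allows this caller/model pair and the cap isn't hit."""
        policy = self.policies.get(caller)
        if policy is None or model not in policy.allowed_models:
            return False  # unknown caller or model not permitted
        if self.in_flight.get(caller, 0) >= policy.max_concurrent:
            return False  # caller already at its concurrency limit
        self.in_flight[caller] = self.in_flight.get(caller, 0) + 1
        return True

    def finish(self, caller: str) -> None:
        """Record that one of the caller's admitted requests has completed."""
        if self.in_flight.get(caller, 0) > 0:
            self.in_flight[caller] -= 1


gw = Gateway({"pii-plugin": Policy(frozenset({"pii-ner"}), max_concurrent=2)})
print([gw.admit("pii-plugin", "pii-ner") for _ in range(3)])  # third hits the cap
print(gw.admit("pii-plugin", "summarizer"))   # model not allowed for this caller
print(gw.admit("unknown-plugin", "pii-ner"))  # no policy for this caller
```

Keeping these checks in one place means a policy change applies to every plugin at once, instead of being re-implemented in each caller.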

By integrating RidgeGen, RidgeBot gains powerful GenAI capabilities while maintaining high efficiency, security, and accuracy.

Closing Thoughts

The rise of Generative AI has unlocked transformative possibilities, enabling advanced semantic understanding and autonomous content generation. In cybersecurity, AI-driven automation is enhancing both efficiency and accuracy in security testing.

The RidgeBot 5.2 platform release introduces RidgeGen alongside an upgraded PII detection plugin that uses a specialized AI model to significantly improve detection accuracy. As we continue to refine RidgeGen, expect new generative AI capabilities that further enhance RidgeBot’s security functions.

Stay tuned for more updates as we advance AI-powered cybersecurity solutions!