What is Ollama?

Ollama is a free, open-source application that lets organizations run AI models locally on their own hardware rather than relying on cloud-based services. Its user-friendly design and broad compatibility with different computer systems have made it extremely popular, with its user base growing by 46% in just three months. Despite being a relatively new project, it has gained more GitHub stars than PyTorch, a long-established framework, and has even surpassed llama.cpp, the well-known LLM inference codebase it is built upon.

Security Alert: Vulnerabilities in Ollama

Our security research team has compiled information from various sources on multiple security vulnerabilities in the Ollama framework. These vulnerabilities range in severity from moderate to high, and some remain present even in the most recent release. All of them pose significant business risks to organizations deploying AI models. Organizations using Ollama in production environments should act immediately to assess their exposure and implement appropriate safeguards.

Understanding the Vulnerabilities in Detail

The vulnerabilities we’ve investigated can be categorized into two main types, each with distinct attack vectors and potential impacts:

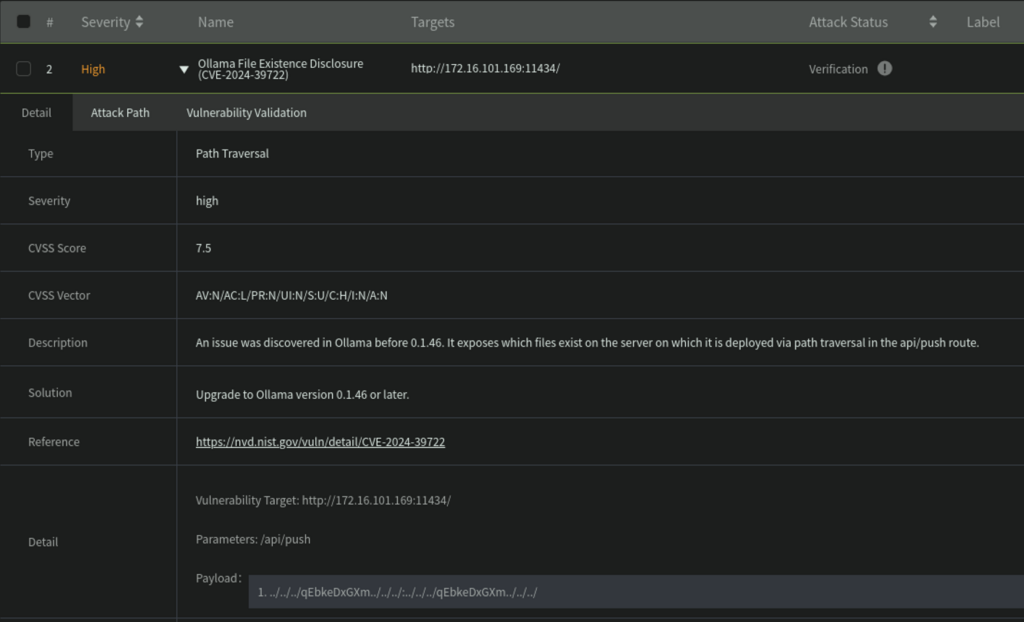

File Disclosure Vulnerabilities (CVE-2024-39722 and CVE-2024-39719): These flaws let attackers determine which files exist on your server through Ollama's api/push and CreateModel (api/create) routes. Without any authorization, attackers can methodically map your server's directory structure, revealing details of your system's configuration that enable more targeted follow-up attacks. CVE-2024-39722 affects versions prior to 0.1.46, while CVE-2024-39719 was reported in versions through 0.3.14.
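A quick first step in assessing exposure to the version-bounded issue above is to ask each instance which release it runs. The sketch below assumes Ollama's GET /api/version endpoint on the default port 11434 and compares the reported version against 0.1.46, the release given above as the fix for CVE-2024-39722. The helper names are hypothetical. A version check cannot clear issues that have no fixed release, so treat it as triage rather than verification.

```python
import json
import urllib.request

# Release in which CVE-2024-39722 (api/push path traversal) is fixed, per the text above.
FIXED_VERSION = (0, 1, 46)


def parse_version(version: str) -> tuple[int, ...]:
    """Turn a string such as "0.1.45" or "v0.3.14-rc1" into a tuple like (0, 1, 45)."""
    # Drop a leading "v" and any pre-release or build suffix before splitting.
    core = version.lstrip("v").split("-")[0].split("+")[0]
    return tuple(int(part) for part in core.split(".")[:3])


def is_vulnerable_to_39722(version: str) -> bool:
    """Return True if the reported version predates the 0.1.46 fix."""
    return parse_version(version) < FIXED_VERSION


def fetch_version(base_url: str = "http://127.0.0.1:11434", timeout: float = 5.0) -> str:
    """Query an Ollama instance's GET /api/version endpoint (assumed reachable)."""
    with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
        return json.load(resp)["version"]


if __name__ == "__main__":
    # Offline demonstration; call fetch_version() against a real host to check a live instance.
    for v in ["0.1.45", "0.1.46", "0.3.14"]:
        print(v, "vulnerable" if is_vulnerable_to_39722(v) else "patched for CVE-2024-39722")
```

Running is_vulnerable_to_39722(fetch_version(...)) over an inventory of hosts gives a first list of instances to prioritize.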

Model Security Vulnerabilities: Model poisoning occurs when a compromised model enters your system because it was downloaded from an untrusted source. Equally concerning is model theft, in which proprietary models are exfiltrated to external servers without authorization. These attacks can expose intellectual property and erode competitive advantages. Organizations using custom or fine-tuned models face particularly high risk, given the business impact of losing that intellectual property.

These vulnerabilities are especially concerning given Ollama’s growing adoption in enterprise environments where sensitive data and proprietary models may be processed.

Vulnerability Detection with RidgeBot

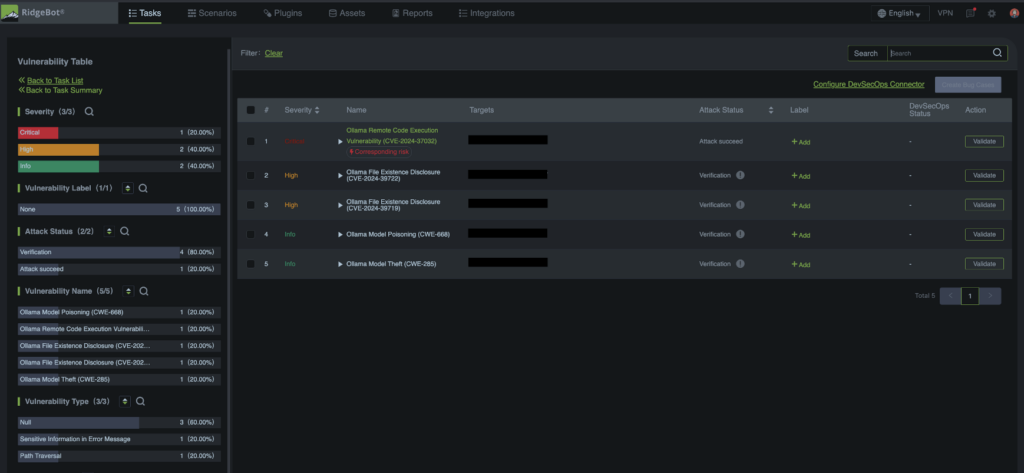

Ridge Security’s RidgeBot provides AI-powered automated testing that can quickly identify these vulnerabilities across your entire network infrastructure. Our specialized scanning modules detect Ollama installations and determine whether they are susceptible to the identified vulnerabilities without disrupting your operations. RidgeBot takes a non-invasive approach, verifying each vulnerability with real payloads. Once vulnerabilities are discovered, RidgeBot produces comprehensive reports covering the vulnerability type, severity, description, and detailed remediation steps.

Fig 1: Ollama vulnerabilities found by RidgeBot

Fig 2: Vulnerability report

Why This Matters

The security of AI systems is becoming increasingly important as more organizations adopt this technology. The rapid growth of Ollama demonstrates organizations’ desire for accessible AI solutions, but this accessibility must be balanced with appropriate security measures.

Our team is committed to supporting the identification and remediation of vulnerabilities in AI systems to help protect your organization’s valuable assets and data.